Friday’s Adalytics report about ads being served en masse to known bots leaves plenty of unanswered questions.

But they mainly boil down to this: How could so many different verification providers and ad tech platforms miss something so simple?

AdExchanger discussed the report and its findings with more than a dozen industry sources. The majority requested anonymity in order to speak freely.

All of the sources agree that the type of bot activity analyzed by Adalytics is among the easiest types of invalid traffic to spot because it doesn’t even try to hide its bot status. They offered possible explanations for why these bots seemingly went undetected.

AdExchanger reached out to the tech companies mentioned in the Adalytics report and will update this story with any further comments from those that have yet to respond.

No user agent

According to Adalytics, bots associated with HTTP Archive typically declare themselves as bot user agents when they crawl a website. And these bot user agents are listed on the IAB Tech Lab’s International Spiders and Bots list.

For example, a buyer that uses verification tools from DoubleVerify (DV) shared log files with AdExchanger from recent ad impressions served to HTTP Archive bots. These log files indicate that the declared user agent for these impressions included the string “PTST,” which is a signifier for HTTP Archive bots.
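To illustrate how simple this kind of check can be, here is a minimal Python sketch that flags a user agent containing a known bot marker. Only the “PTST” string comes from the log files described above. The other patterns, the sample user agent strings and the `is_declared_bot` function are hypothetical. The real IAB Tech Lab list is a licensed file with its own format and matching rules, which this sketch does not reproduce.

```python
from typing import Optional

# Illustrative patterns only. "PTST" is the HTTP Archive marker cited in the
# log files; the others are generic placeholders, not the IAB Tech Lab list.
BOT_PATTERNS = ("PTST", "bot", "crawler", "spider")


def is_declared_bot(user_agent: Optional[str]) -> bool:
    """Return True if the user agent contains any known bot pattern."""
    if not user_agent:
        # No user agent was passed along, so this check cannot flag anything.
        return False
    ua = user_agent.lower()
    # Case-insensitive substring match against each pattern.
    return any(pattern.lower() in ua for pattern in BOT_PATTERNS)


print(is_declared_bot("Mozilla/5.0 (X11; Linux x86_64) Chrome/120.0 Safari/537.36 PTST/240101"))  # True
print(is_declared_bot("Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"))  # False
print(is_declared_bot(None))  # False
```

In this sketch a missing user agent simply returns False. A stricter system might treat a missing user agent as suspicious in its own right.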

User agent data can be passed from the SSP to the DSP when the SSP sends a bid request. But the SSP isn’t required to pass the user agent, according to OpenRTB guidelines.
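In OpenRTB 2.x bid requests, the user agent travels in the `ua` field of the `device` object, and both are marked as recommended rather than required. The hypothetical sketch below shows why a missing field matters. The function names and sample requests are illustrative and do not reflect any DSP’s actual API. When the user agent is absent, a pre-bid check that depends on it has nothing to match against and can only return “unknown.”

```python
import json
from typing import Optional


def extract_user_agent(bid_request_json: str) -> Optional[str]:
    """Return device.ua from an OpenRTB 2.x bid request, or None if it is absent."""
    request = json.loads(bid_request_json)
    device = request.get("device") or {}  # the device object itself may be missing
    ua = device.get("ua")
    return ua or None  # treat an empty string the same as a missing field


def prebid_bot_check(bid_request_json: str, bot_markers=("PTST",)) -> str:
    """Hypothetical pre-bid filter: 'bot', 'pass', or 'unknown' when there is no UA."""
    ua = extract_user_agent(bid_request_json)
    if ua is None:
        return "unknown"
    return "bot" if any(marker in ua for marker in bot_markers) else "pass"


with_ua = json.dumps({"id": "1", "device": {"ua": "Mozilla/5.0 PTST/240101", "ip": "192.0.2.45"}})
without_ua = json.dumps({"id": "2", "device": {"ip": "192.0.2.45"}})
print(prebid_bot_check(with_ua))     # bot
print(prebid_bot_check(without_ua))  # unknown
```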

Multiple sources agreed that, if the DSP is unable to provide the user agent data to the buyer’s verification provider, then it could explain why verification platforms are missing these declared bots.

For example, a buy-side source claimed that The Trade Desk’s API does not contain functionality for excluding or including an ad impression in real time based on the user agent.

However, multiple buy-side sources shared screenshots from The Trade Desk’s data marketplace user interface, where buyers can apply pre-bid bot-mitigation services from Integral Ad Science (IAS) and DV.

The Trade Desk’s UI describes the IAS fraud prevention feature as combining “predictive and real-time signals” to avoid invalid traffic “at the URL, app, IP and user-agent level.” But that can’t be the case if The Trade Desk isn’t passing along the user agent to IAS.

Meanwhile, The Trade Desk’s UI describes the DV fraud prevention feature as excluding impressions “where DV has previously evaluated and identified as running in browsers controlled by a nonhuman bot or from an invalid traffic source such as nonhuman data center traffic, hijacked device or mobile emulator.” It adds that this filtering also includes “impressions from General Invalid Traffic, including known bot/spider [and] known data center.”

A buy-side source said that, based on this description, DV should therefore be able to filter out impressions from declared bot user agents and IP addresses in real time.

And yet DV and IAS did not always successfully filter out these declared bot impressions, according to Adalytics – which suggests these pre-bid bot filtration tools don’t work as described in The Trade Desk’s UI.

DoubleVerify and IAS each published blog posts disputing the Adalytics report’s findings.

Notably, other screencaps of DSP user interfaces that buyers shared with AdExchanger, including from Amazon DSP and DeepIntent, did not contain references to IAS’s and DV’s tools conducting real-time filtering of known bots or blocking based on user agent.

The Trade Desk provided this statement: “Ad verification is an area where we use a combination of internal tools and integrated partner technologies. We will continue to evaluate and work closely with our partners to review performance and maintain our leadership in this area.”

But, regardless of whether the DSP facilitates pre-bid filtering based on user agent, verification providers should be able to access the user agent data on their own, said a former IAS employee, because that data is transmitted whenever a network request is made.

Model training

Several sources said verification platforms or DSPs could be deliberately letting some known bot traffic slip through in order to train their detection models on bot behavior and help them identify non-declared bots.

For example, the report discusses instances of The Trade Desk apparently advertising its products and services to bots. These could have been the DSP attempting to flag those impressions as invalid, said a person who works in the bot detection industry.

“They can’t sell that impression to any of their clients, but they can serve up one of their own random internal ads,” the bot detection expert said. “That would give them an opportunity to execute their JavaScript, potentially fingerprint that bot and then know when that bot or similar sessions are showing up.”

So there could be a reasonable explanation for why a DSP would serve its own ads to known bots. But what about the verification platforms?

An ex-IAS employee told AdExchanger that if verification companies were to deliberately serve some ad impressions to bots for testing purposes, they would need to make that clear in their contracts. And to this source’s knowledge, verification companies do not include such language in their contracts.

But regardless, multiple sources who spoke with AdExchanger said they doubted that training is enough of an explanation for why verification companies failed to detect well-known bots over a more than three-year period, as reflected in the report.

“Give them the benefit of the doubt: Maybe on the very first day you come across something, you don’t identify that it’s a bot correctly,” said the bot detection expert. “But then all of the rest of the impressions with that exact same fingerprint should be consistently detected.”
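The expert’s point can be sketched as a simple memory of flagged fingerprints: once one impression is classified as a bot, every later impression with the identical fingerprint can be caught with a lookup. This is a hypothetical illustration. Real fingerprinting combines many more signals than the user agent and IP address used here, and the class and function names are invented for the example.

```python
import hashlib


def fingerprint(user_agent: str, ip: str) -> str:
    """Hypothetical fingerprint: SHA-256 over the user agent and IP address only."""
    return hashlib.sha256(f"{user_agent}|{ip}".encode("utf-8")).hexdigest()


class FlaggedFingerprints:
    """Remembers fingerprints that have already been classified as bots."""

    def __init__(self) -> None:
        self._flagged = set()

    def flag(self, fp: str) -> None:
        self._flagged.add(fp)

    def is_flagged(self, fp: str) -> bool:
        # Any later impression with the exact same fingerprint matches here.
        return fp in self._flagged


memory = FlaggedFingerprints()
memory.flag(fingerprint("Mozilla/5.0 PTST/240101", "192.0.2.45"))  # first detection
print(memory.is_flagged(fingerprint("Mozilla/5.0 PTST/240101", "192.0.2.45")))  # True
```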

A sample explanation

Another possible reason verification vendors are failing to catch declared bots is that they may be sampling traffic rather than examining 100% of impressions, multiple sources theorized to AdExchanger.

For example, one ex-IAS employee claimed they observed the company running bot detection code on only 50% of traffic.
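One way to sample traffic is to hash each impression ID into a bucket and run detection only for buckets below the sampling rate. The sketch below assumes that approach. It is hypothetical and does not describe IAS’s actual method. It shows the consequence the sources describe: an impression that isn’t sampled is never examined, no matter how openly it declares itself a bot.

```python
import hashlib


def is_sampled(impression_id: str, rate_percent: int = 50) -> bool:
    """Deterministically decide whether detection code runs on this impression."""
    digest = hashlib.sha256(impression_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < rate_percent


def detect(impression_id: str, user_agent: str, rate_percent: int = 50) -> str:
    """Hypothetical detector that only looks at sampled impressions."""
    if not is_sampled(impression_id, rate_percent):
        return "not examined"  # a declared bot slips through unchecked
    return "bot" if "PTST" in user_agent else "pass"


# Roughly half of 10,000 impressions get examined at a 50% sampling rate.
examined = sum(is_sampled(f"imp-{i}") for i in range(10_000))
print(examined)
```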

Problem is, buyers assume that their bot detection partners “work 100% of the time,” a brand media exec said, “because I pay a CPM on 100% of the impressions.”

However, according to the bot detection expert AdExchanger spoke with, neither sampling nor letting some bot traffic through for training purposes would explain the inconsistencies in bot detection that Adalytics observed over a three-year period.

“This was a detection failure,” the bot expert said. In other words, it appears the tech itself was to blame, rather than how the tech was applied.

IP addresses and VPNs

Several sources also said that the HTTP Archive bots’ IP addresses should have been a red flag, since they’re tied to known data center IP addresses that have been flagged by organizations like the Trustworthy Accountability Group (TAG).
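Checking an address against a list of data center ranges is straightforward with Python’s standard `ipaddress` module. In this minimal sketch, the ranges are reserved documentation prefixes standing in for a real, regularly updated data center list. They are placeholders, not actual HTTP Archive or TAG data, and `is_data_center_ip` is a hypothetical helper.

```python
import ipaddress

# Placeholder ranges (reserved documentation prefixes), standing in for a
# maintained data center list. Not real HTTP Archive or TAG data.
DATA_CENTER_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_data_center_ip(ip: str, ranges=DATA_CENTER_RANGES) -> bool:
    """Return True if the address falls inside any listed data center range."""
    addr = ipaddress.ip_address(ip)
    # Membership tests across IPv4/IPv6 simply return False rather than raising.
    return any(addr in net for net in ranges)


print(is_data_center_ip("192.0.2.45"))   # True
print(is_data_center_ip("203.0.113.9"))  # False
```

The check is only as good as the list behind it: a stale table gives stale answers.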

But it’s possible that verification providers and ad platforms use outdated IP address tables. This could explain why Adalytics found targeted ads that seemed to mistake traffic from known bot data centers in certain countries for people based in entirely different countries.

For example, the report describes a Hershey’s ad served to a URLScan bot, which is a web crawler that does not declare its bot status. The URLScan bot accessed a site called mydominicankitchen.com. The IP address associated with the bot in question was assigned to a data center located in Australia. But The Trade Desk and Index Exchange appear to have placed the bot in Wilmington, Delaware, and so served it a targeted ad based on that inference.

The last time the bot’s IP address was tied to a location in the US was nearly three years before the Hershey’s ad was served, according to the Adalytics report.

IAS, meanwhile, did not flag this traffic as invalid, according to Adalytics.

If the platforms involved hadn’t updated their IP address tables during that time, it could explain the discrepancy.

Dodging data centers

Either way, serving ads to data center IP addresses is inherently wasteful, said Sander Kouwenhoven, CTO at Oxford BioChronometrics, a firm that specializes in online fraud prevention and user authentication. Kouwenhoven contributed to the Adalytics report.

“People don’t reside at data centers normally, except maybe the three guys in the operations room,” he quipped.

Why then are ads being served to bots affiliated with data centers?

Multiple sources AdExchanger spoke with said it’s possible that verification vendors, DSPs and SSPs are not shutting off ads for all IP addresses tied to data centers because they’re worried about blocking humans using VPNs. A VPN replaces a user’s real IP address with one sourced from a data center in another location, often a different country.

However, this wouldn’t explain why known bots like HTTP Archive’s, whose IP addresses are never shared with VPNs, aren’t being filtered out, the bot detection expert said.

Will anything change?

So, there you have it: Another Adalytics report exposing holes in ad tech’s safety net. Is this the one that will finally break through and inspire change?

To put it mildly, there’s widespread cynicism that the industry remains incentivized to ignore the drumbeat of exposés. But most of the sources who spoke with AdExchanger agree change will have to begin on the buy side.

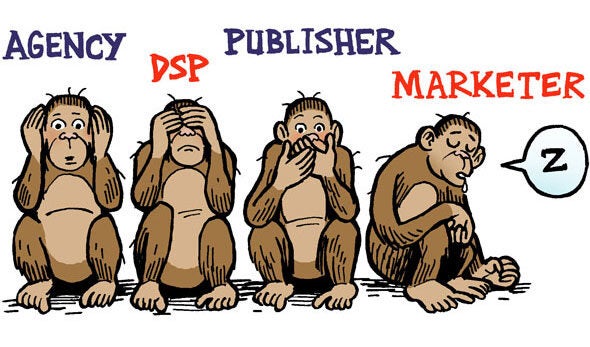

“Agencies have lost so much technical talent, they can’t police programmatic buys at any meaningful scale,” said a former media buyer. “Brands have even less technical understanding of how this stuff works.”

There’s a reason why the industry can’t seem to avoid serving wasted impressions to invalid traffic, the former media buyer added. “It’s not because of technicalities; it’s a lack of education and, frankly, a lack of desire to be educated.”

And brands shouldn’t take solace in makegoods, said Jay Friedman, CEO at ad agency Goodway Group, because refunds won’t solve the problem.

“You’re going to get your CPM back from the vendors,” he said, “but that doesn’t pay you back for the media, data costs and everything else.”

Publishers, meanwhile, could take the initiative to eliminate bot traffic themselves, said Jason White, chief product and technology officer at The Arena Group. After all, when buyers demand clawbacks for invalid traffic, publishers are inevitably the ones who lose out.

The Arena Group claims to have blocked bot traffic to its sites using the IAB Tech Lab International Spiders and Bots list and by working with bot fraud protection provider DataDome. It now prevents ads from being served to roughly 40% of traffic to its sites, White said, which corresponds to most estimates of online bot activity.

But, ultimately, advertisers control the purse strings, which means they control the incentives.

“Far too many people, especially the big money advertisers and old CPG brands, throw hundreds of millions of dollars out there and know jack about it,” said a brand media executive who spoke with AdExchanger. “If you really want to pressure [verification providers], just stop spending with them.”